Useful Python Scripts

Latest revision as of 13:11, 7 October 2008

On this page you will find (and can add!) Python scripts that may be useful in any work-related way.

Converting .pdb files from vmd to xleap readable format

This script takes a filename as a command-line argument, reads the corresponding .pdb file and converts it to an xleap-readable format. The input file is overwritten, but the original contents are retained in filename.orig.

#!/usr/bin/env python
# -*- coding: latin1 -*-
import sys

def main():
    fname = sys.argv[1]
    fin = open( fname, "r")
    lines = fin.readlines()
    fin.close()
    lin2=[]
    foumod=open( fname+".orig","w")
    fomod=open( fname,"w")
    counter=1
    first=True
    oldnum=""
    for l in lines:
        if l.find( "CRYST")==-1:
            l2=l.replace( " X ", " ")
            if len( l2)>16:
                if l2[13].isdigit():
                    subs=l2[12:16]
                    subs2=l2[13:17]
                    l2=l2.replace( subs, subs2)
            if (not first) and len( l2)>25:
                if oldnum!=l2[25]:
                    oldnum=l2[25]
                    print oldnum
                    lin2.append( "TER\n")
                    counter+=1
            first=False
            lin2.append( l2)
        else:
            counter-=1
    lin2.insert(-1,"TER\n")
    for i in range( counter):
        lines.append( "")
    for l,l2 in zip( lines, lin2):
        foumod.write( l)
        fomod.write( l2)

if __name__ == "__main__":
    main()
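The overwrite-but-keep-a-backup behaviour described above can be looked at separately from the .pdb handling. The following is only a minimal sketch and is not part of the script itself: the function name rewrite_with_backup and the transform callback are hypothetical names introduced for illustration.

```python
import shutil


def rewrite_with_backup(fname, transform):
    """Copy fname to fname + '.orig', then overwrite fname line by line.

    transform is a hypothetical callback that maps one input line to a
    list of output lines (an empty list drops the line).
    """
    backup = fname + ".orig"
    shutil.copyfile(fname, backup)  # keep the untouched original
    with open(backup) as fin:
        lines = fin.readlines()
    with open(fname, "w") as fout:
        for line in lines:
            fout.writelines(transform(line))
    return backup
```

For example, rewrite_with_backup("model.pdb", lambda l: [] if "CRYST" in l else [l]) would drop the CRYST records from a (hypothetical) model.pdb while leaving the full original in model.pdb.orig.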

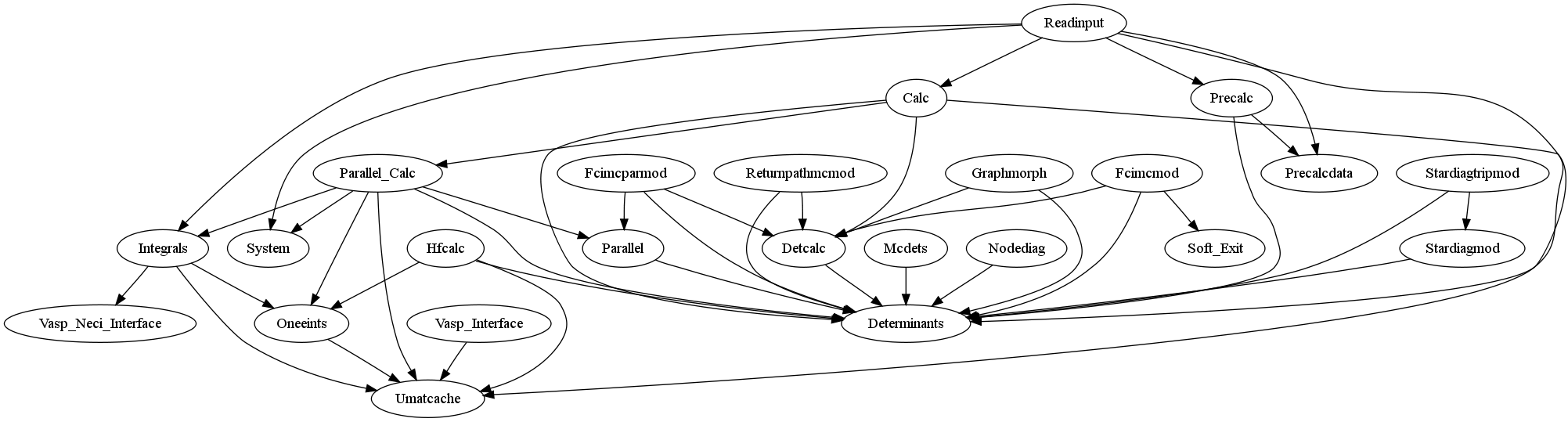

Fortran module dependencies

It is useful to be able to view the module dependencies in a Fortran code graphically. This is especially helpful when trying to reorder code for a cleaner flow, trying to remove issues leading to circular dependencies, or becoming familiar with the structure of a new code.

get_dependencies.py is a script which analyses source code and outputs (in the dot language) the dependencies on modules. The output contains the following information:

- modules depending on other modules.

- non-module files depending on modules.

These are further split up into dependencies involving utility or data modules (which must be specified inside get_dependencies.py). Graphs of the dependencies can be produced using a suitable parser.
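To make the idea concrete, here is a rough sketch of how "use" statements could be collected and written out in the dot language. It is not the code in get_dependencies.py: the function names module_dependencies and to_dot and the regular expressions are assumptions made for illustration, and the split into utility and data modules is left out.

```python
import re

# Matches e.g. "use foo", "USE foo, only: bar", "use, intrinsic :: iso_c_binding".
USE_RE = re.compile(r"^\s*use\b(?:\s*,\s*\w+\s*::|\s*::)?\s*(\w+)", re.IGNORECASE)
# Matches "module foo" but not "module procedure foo" or "end module foo".
MODULE_RE = re.compile(r"^\s*module\s+(\w+)\s*(?:!.*)?$", re.IGNORECASE)
END_MODULE_RE = re.compile(r"^\s*end\s*module\b", re.IGNORECASE)


def module_dependencies(source):
    """Return {module name: set of used modules} for one source string.

    Use statements outside any module are collected under the key None.
    """
    deps = {}
    current = None
    for line in source.splitlines():
        m = MODULE_RE.match(line)
        if m:
            current = m.group(1).lower()
            deps.setdefault(current, set())
            continue
        if END_MODULE_RE.match(line):
            current = None
            continue
        m = USE_RE.match(line)
        if m:
            deps.setdefault(current, set()).add(m.group(1).lower())
    return deps


def to_dot(deps, graph_name="dependencies"):
    """Render the dependency mapping as a dot digraph (one edge per use)."""
    lines = ["digraph %s {" % graph_name]
    for mod in sorted(deps, key=str):
        for used in sorted(deps[mod]):
            lines.append('    "%s" -> "%s";' % (mod or "main", used))
    lines.append("}")
    return "\n".join(lines)
```

Non-module code is labelled "main" here purely for simplicity; a real tool would more likely use the file name.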

Usage:

get_dependencies.py [source files]

The user needs to do a few things before first use:

- Give the names of your data and utility modules and the output directory in the appropriate place in get_dependencies.py.

- Create the output directory.

- Run get_dependencies on your source code. I do:

~/src $ dependencies/get_dependencies.py *.F{,90}

as all our source files are either .F or .F90 files.

Producing graphs from the output requires graphviz. This is not installed in the sector, but is easy to compile.

Run the desired parser (e.g. one of those provided by graphviz) on the resultant dot files. I find dot works best for our graphs. I run:

~/src/dependencies $ for f in *.dot; do dot -Tps $f -o `basename $f .dot`.ps; done

to produce all the graphs in one shot. (Note ~/local/bin is on my path.)
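The same batch conversion can be driven from Python if that is more convenient. This is a sketch only: the helper names dot_command and render_all are hypothetical, and it assumes the graphviz dot executable is on your path.

```python
import glob
import os
import subprocess


def dot_command(dot_file, fmt="ps"):
    """Build the dot command line that writes the output next to the input."""
    out = os.path.splitext(dot_file)[0] + "." + fmt
    return ["dot", "-T" + fmt, dot_file, "-o", out]


def render_all(directory=".", fmt="ps"):
    """Run dot on every .dot file in directory (requires graphviz)."""
    for dot_file in sorted(glob.glob(os.path.join(directory, "*.dot"))):
        subprocess.check_call(dot_command(dot_file, fmt))
```

Calling render_all() from the output directory has the same effect as the shell loop above.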

If you are using Python 2.4, James suggests adding the following lines to the get_dependencies.py script, after the import re,sys line:

# 2.4 compatibility:
try:
    set(['abc'])
except NameError:
    from sets import Set as set

This addition is harmless when the script is run under a later version of Python.

Monitoring and interacting with jobs on compute nodes

It is useful to be able to monitor and interact with running jobs after a certain amount of time has elapsed. This is straightforward to do with the python_pbs module (https://subtrac.sara.nl/oss/pbs_python), which provides easy access to the Torque API.

watchdog.py is a script which, when run at the beginning of a submit script, will sit in the background until a set amount of time before the walltime expires, at which point it will run the job_cleanup() function (which is contained within watchdog.py).

The Alavi Group use job_cleanup to create a file called SOFTEXIT in the working directory of the calculation: our code monitors for the existence of this file when doing iterative processes and exits cleanly if it exists. This allows us to exit smoothly from the calculation and produce a restart file if the desired number of iterations has not been reached but the walltime has been. The job_cleanup() function should be modified accordingly for other uses.
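On the calculation side, the SOFTEXIT check might look something like the sketch below. This is an illustration rather than the Alavi Group code: run_iterations, step and write_restart are hypothetical names standing in for the real iterative process and its restart writer.

```python
import os


def run_iterations(n_iterations, step, write_restart, workdir=".", flag="SOFTEXIT"):
    """Run up to n_iterations steps, stopping early if the flag file appears.

    step(i) performs iteration i; write_restart(done) saves the state after
    `done` completed iterations. Returns the number of iterations completed.
    """
    flag_path = os.path.join(workdir, flag)
    done = 0
    for i in range(n_iterations):
        if os.path.exists(flag_path):
            write_restart(done)  # save state so the job can be resumed later
            break
        step(i)
        done += 1
    return done
```

Checking once per iteration keeps the overhead negligible, but it does mean a single iteration must be comfortably shorter than the exit time given to the watchdog.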

$ watchdog.py

usage: Monitor a running job and run a cleanup function when the elapsed time gets to within a specified amount of the walltime allowed for the job.

Usage:

watchdog.py [options] job_id &

This should be called from your PBS script, so that watchdog is automatically running in the correct working directory.

job_id needs to be the full job id, as used internally by torque (e.g. 47345.master.beowulf.cluster on tardis).

Care must be taken that the watchdog is terminated when the job finishes (rather than PBS waiting for the watchdog to finish!).

watchdog does, however, listen out for the interrupt signal. Recommended use in a PBS script is:

watchdog.py [options] $PBS_JOBID &

[Job commands]

killall -2 watchdog.py

options:
  -h, --help            show this help message and exit
  -e EXIT_TIME, --exit-time=EXIT_TIME
                        Amount of time (in seconds) before the walltime runs
                        out at which the job_cleanup function is called to
                        terminate the job. Default=900s.

Please note that the killall command is vital so that the job exits cleanly if the calculation finishes well before the end of the walltime, rather than waiting for watchdog.py to wake up.
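The timing and signal handling can be sketched without any reference to Torque. The code below is an assumption-laden illustration, not watchdog.py itself: in the real script the walltime and elapsed time are obtained through the PBS module, whereas here they are simply passed in as seconds, and the names seconds_until_cleanup and watch are hypothetical.

```python
import signal
import sys
import time


def seconds_until_cleanup(walltime, elapsed, exit_time=900):
    """Seconds to sleep before cleanup; zero if already inside the window."""
    return max(0, walltime - elapsed - exit_time)


def job_cleanup(path="SOFTEXIT"):
    """Create the flag file that asks the calculation to stop cleanly."""
    open(path, "w").close()


def watch(walltime, elapsed, exit_time=900, flag="SOFTEXIT", sleep=time.sleep):
    """Sleep until exit_time seconds before the walltime, then clean up."""
    # "killall -2" sends SIGINT: exit quietly so the job script can finish.
    signal.signal(signal.SIGINT, lambda signum, frame: sys.exit(0))
    sleep(seconds_until_cleanup(walltime, elapsed, exit_time))
    job_cleanup(flag)
```

With a one-hour walltime and ten minutes already elapsed, seconds_until_cleanup(3600, 600) gives 2100, so cleanup would run 900 seconds before the limit.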

python_pbs is not installed on the servers, but it is easy to install locally in the standard "./configure; make; make install"-type manner. The documentation is a little patchy, but it comes with some very useful example scripts.